We all know that in the near future humanity will come to a crossroads. With 99% of the world’s population currently tasked with creating memes and/or dank memes, what will happen when computers get better at it than humans? Researchers may have just found out.

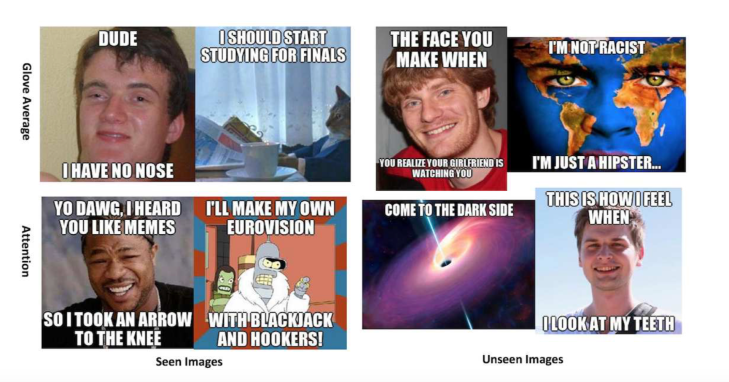

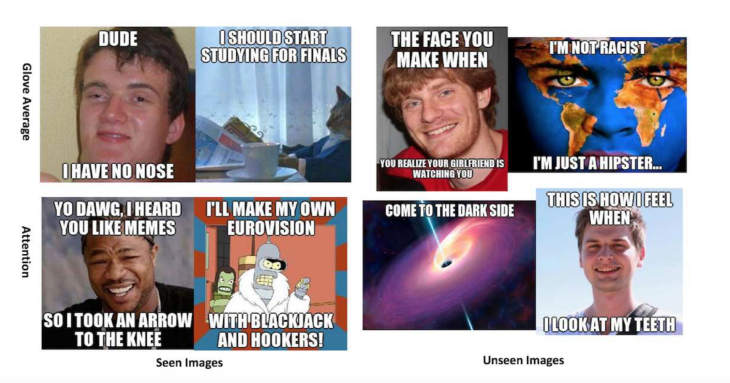

Using machine learning, a pair of Stanford researchers, Abel L. Peirson V and E. Meltem Tolunay, have created a system that automatically generates memes, including the ones visible above. Their system, they’ve discovered, “produces original memes that cannot on the whole be differentiated from real ones.”

You can read the report here.

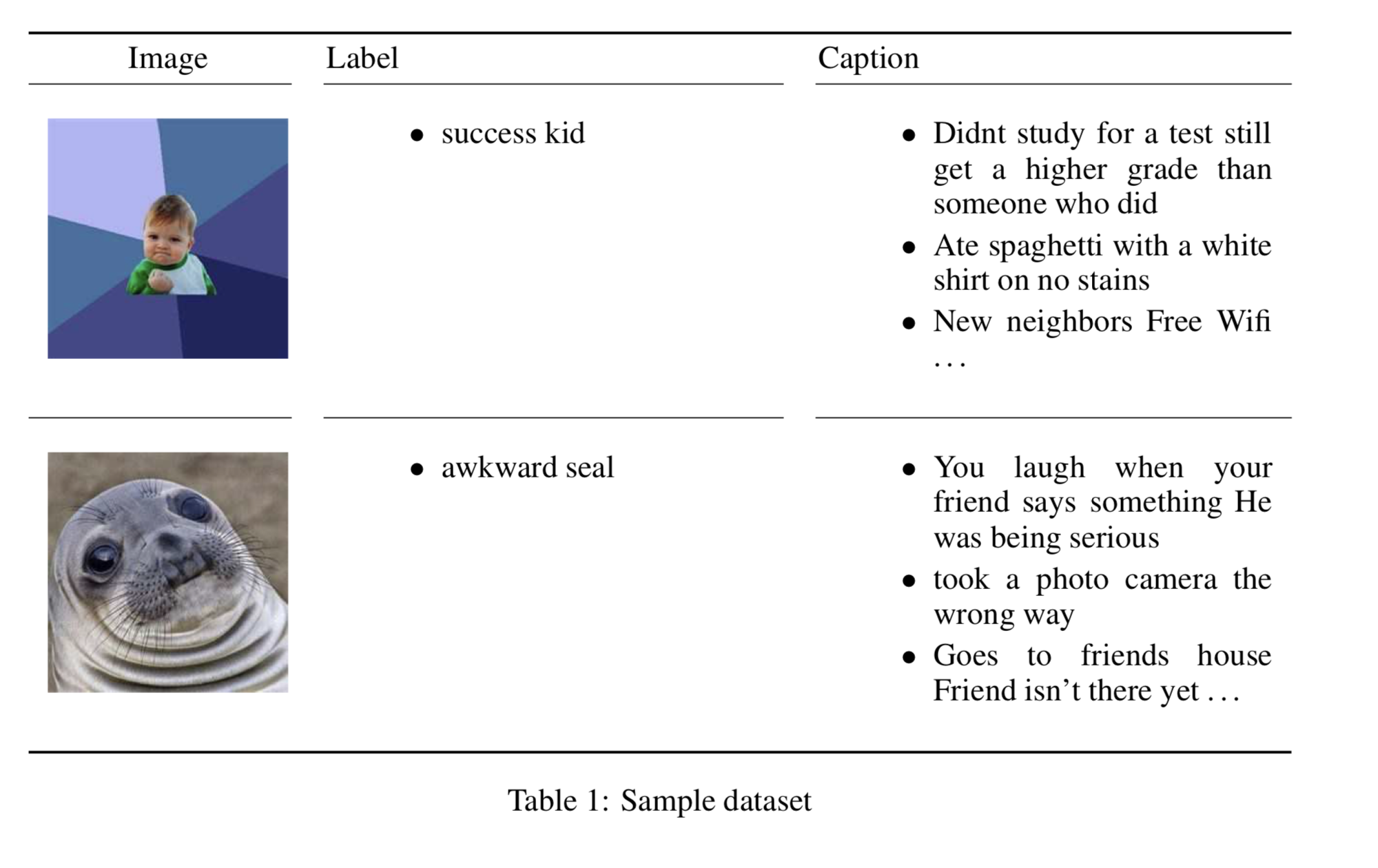

The system uses a pre-trained Inception-v3 network to turn each image into an embedding, which is passed to a long short-term memory (LSTM) model that produces captions applicable to that particular picture. Humans then assess the humor of the meme, rewarding the system for true LOLs.
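For the curious, here is a rough idea of what "image in, caption out" looks like in code. This is a toy sketch, not the researchers' code: the vocabulary, the `ToyLSTMCaptioner` class and the `fake_image_embedding` helper are all made up for illustration, the weights are random rather than trained, and a random vector stands in for what Inception-v3 would return. It also picks the single most likely word at each step (greedy decoding), which is an assumption on our part and a simplification of any real captioning system.

```python
import math
import random

# Hypothetical toy vocabulary; a real model learns thousands of words.
VOCAB = ["<start>", "<end>", "one", "does", "not", "simply", "meme"]
HIDDEN = 8  # toy size; real models use hundreds of units


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ToyLSTMCaptioner:
    """Untrained LSTM decoder with random weights (biases omitted for brevity)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # One weight matrix per gate, applied to [input ; previous hidden state].
        self.gates = {g: rand_matrix(HIDDEN, 2 * HIDDEN, rng) for g in "ifog"}
        self.embed = rand_matrix(len(VOCAB), HIDDEN, rng)  # word embeddings
        self.out = rand_matrix(len(VOCAB), HIDDEN, rng)    # hidden -> word scores

    def step(self, x, h, c):
        z = x + h  # concatenate input and previous hidden state
        i = [sigmoid(v) for v in matvec(self.gates["i"], z)]    # input gate
        f = [sigmoid(v) for v in matvec(self.gates["f"], z)]    # forget gate
        o = [sigmoid(v) for v in matvec(self.gates["o"], z)]    # output gate
        g = [math.tanh(v) for v in matvec(self.gates["g"], z)]  # candidate memory
        c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        return h, c

    def caption(self, image_embedding, max_len=10):
        h, c = [0.0] * HIDDEN, [0.0] * HIDDEN
        # Assumption: the image embedding is fed in as the very first input.
        h, c = self.step(image_embedding, h, c)
        token = VOCAB.index("<start>")
        words = []
        for _ in range(max_len):
            h, c = self.step(self.embed[token], h, c)
            scores = matvec(self.out, h)
            # Greedy decoding: take the highest-scoring word.
            token = max(range(len(VOCAB)), key=scores.__getitem__)
            if VOCAB[token] == "<end>":
                break
            words.append(VOCAB[token])
        return words


def fake_image_embedding(seed=1):
    """Stand-in for the vector a pre-trained image network would produce."""
    rng = random.Random(seed)
    return [rng.uniform(-1, 1) for _ in range(HIDDEN)]


if __name__ == "__main__":
    print(" ".join(ToyLSTMCaptioner().caption(fake_image_embedding())))
```

Because nothing here is trained, the output is word salad. The point is only the shape of the loop: image vector first, then one word at a time until the model says it is done.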

The researchers trained the network with “400.000 image, label and caption triplets with 2600 unique image-label pairs” including funny memes generated by actual humans. The system then recreates memes in a similar vein.

Does it work? Yes, it does, but I doubt it will replace human meme-workers any time soon. Humanity, it seems, is safe… for now.

“We acknowledge that one of the greatest challenges in our project and other language modeling tasks is to capture humor, which varies across people and cultures. In fact, this constitutes a research area on its own, and accordingly new research ideas on this problem should be incorporated into the meme generation project in the future. One example would be to train on a dataset that includes the break point in the text between upper and lower for the image. These were chosen manually here and are important for the humor impact of the meme. If the model could learn the breakpoints this would be a huge improvement and could fully automate the meme generation. Another avenue for future work would be to explore visual attention mechanisms that operate on the images and investigate their role in meme generation tasks,” the researchers wrote.
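To make the "break point" idea concrete: a meme caption has to be cut into top text and bottom text somewhere. The researchers picked those cuts by hand. The sketch below is a hypothetical stand-in, not anything from the paper: the `split_caption` function simply cuts at the word boundary that leaves the two halves closest in length. It knows nothing about comic timing, which is exactly why a learned breakpoint would be an improvement.

```python
def split_caption(caption):
    """Split a caption into (top_text, bottom_text) at a word boundary.

    Naive heuristic (an assumption for illustration): choose the boundary
    that makes the two halves as close in character length as possible.
    """
    words = caption.split()
    if len(words) < 2:
        # Nothing to split: everything goes on top.
        return " ".join(words), ""

    best_k, best_gap = 1, None
    for k in range(1, len(words)):
        top_len = len(" ".join(words[:k]))
        bottom_len = len(" ".join(words[k:]))
        gap = abs(top_len - bottom_len)
        if best_gap is None or gap < best_gap:
            best_k, best_gap = k, gap

    return " ".join(words[:best_k]), " ".join(words[best_k:])


if __name__ == "__main__":
    top, bottom = split_caption("one does not simply generate a dank meme")
    print(top.upper())
    print(bottom.upper())
```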

Sadly, however, we still cannot trust our robotic meme overlords not to be nasty.

“Lastly we note that there was a bias in the dataset towards expletive, racist and sexist memes, so yet another possibility for future work would be to address this bias,” wrote the researchers.
