Instagram and its users do benefit from the app’s ownership by Facebook, which invests heavily in new artificial intelligence technologies. Now, that AI could help make Instagram more tolerable for humans. Today Instagram announced a new set of anti-cyberbullying features. Most importantly, Instagram can now use machine learning to optically scan photos posted to the app to detect bullying and send those posts to its community moderators for review. That means harassers won’t be able to simply scrawl out threatening or defamatory notes and then post a photo of them to bypass Instagram’s text filters for bullying.

In his first blog post directly addressing Instagram users, the division’s newly appointed leader, Adam Mosseri, writes: “There is no place for bullying on Instagram . . . As the new Head of Instagram, I’m proud to build on our commitment to making Instagram a kind and safe community for everyone.”

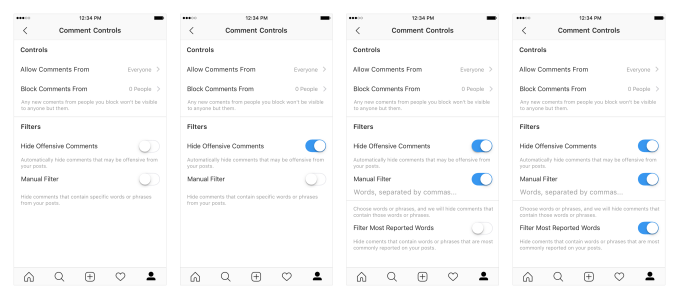

Instagram users will find the “Hide Offensive Comments” setting turned on by default. They can also manually list words they want filtered out of their comments, and can choose to auto-filter the most commonly reported words.

Meanwhile, Instagram is expanding its proactive filter for bullying in comments beyond the feed, Explore, and profiles so that it also protects Live broadcasts. Instagram is also launching a “Kindness” camera effect in partnership with Maddie Ziegler, best known for playing a child version of Sia in the singer’s “Chandelier” music video.
